The Pain of Automated Testing

I’ve been writing code for a living for over a decade. I have seen some projects with good code (clear responsibilities, high cohesion, low coupling, easy to change, and pleasant to read) and many with bad code (code nobody wants to touch).

Like marriages, good projects all look alike, while each bad one is bad in its own way. One of the most important characteristics that separates the good from the bad is multiple layers of effective automated tests.

Most programmers know that automated tests help a team work at a sustainable pace with a manageable level of technical debt, as Lisa Crispin (co-author of Agile Testing and More Agile Testing) puts it. So why don’t we apply such an incredible practice in so many projects? Why don’t programmers like writing automated tests?

Let’s answer that question first.

While programming, we have already verified the code’s behavior in our heads, so doing it again in test cases feels like pointless repetition that we are not interested in! Given any excuse, we will happily skip this part.

How do we make ourselves love writing automated tests? My answer is Test Driven Development (TDD).

TDD is one of the eXtreme Programming (XP) practices developed by Kent Beck (author of Test Driven Development: By Example). It promotes repeating short coding cycles to evolve the solution as new requirements arrive.

The Coding Cycle of TDD

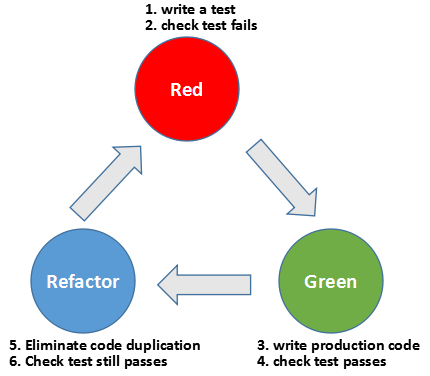

TDD has a three-step “mantra”, red/green/refactor:

- Write the simplest failing test case (red) before writing any production code.

- Write the minimal production code needed to pass the failing test case (green).

- Refactor the test case or production code while keeping all tests passing (refactor).

This coding cycle is repeated for every scenario in the requirements.
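As an illustration only, here is a minimal sketch of two red/green/refactor cycles in Python. The function `minimum` and the test names are hypothetical, chosen just to show the rhythm; the tests are plain pytest-style functions, and the file snapshot is taken after the second cycle's refactor step.

```python
# Cycle 1, red: this test is written first and fails while minimum() does not exist.
def test_minimum_of_one_number_is_that_number():
    assert minimum([5]) == 5


# Cycle 2, red: a new scenario that the cycle-1 code (`return numbers[0]`) fails.
def test_minimum_of_several_numbers_is_the_smallest():
    assert minimum([6, 9, 15, -2, 92, 11]) == -2


# Green, then refactor: after cycle 1 the body was just `return numbers[0]`.
# Cycle 2 forced a real comparison, which was then refactored to the built-in min().
def minimum(numbers):
    """Return the smallest value in a non-empty list of numbers."""
    return min(numbers)


if __name__ == "__main__":
    test_minimum_of_one_number_is_that_number()
    test_minimum_of_several_numbers_is_the_smallest()
    print("all tests passed")
```

Note how the first green step is deliberately naive: returning the first element is the least code that passes cycle 1, and only the second scenario justifies real logic.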

So how does TDD answer the question of programmers not liking to write automated tests? By writing a test case before any production code, we force ourselves to design, one scenario at a time, how our production code will be used. It’s like creating a cake mold first.

Creative Commons Hello Kitty cake mold by Annette Young is licensed under CC BY 2.0

The test case guarantees that the production code we write meets the expectation for that scenario. As long as we follow the rule above of writing only the minimal production code needed to pass the failing test, we get nothing more and nothing less, just like baking a cake with the mold above.

Creative Commons Hello Kitty Strawberry Birthday Cake by Karen is licensed under CC BY 2.0

What if we write test cases after production code? Well, we may end up with something only close to our expectation, over-design our logic, or even miss some boundary cases.

Creative Commons Hello Kitty cake for Lydia’s fifth birthday by Anthony Kendall is licensed under CC BY 2.0 / Cropped from original

Writing test cases afterwards is like putting a baked cake into a cake box. We are happy as long as the box is large enough, even if it is much bigger than what our requirements specify. The fun of test design is lost.

The Benefits of TDD

Better design

As we know, tightly coupled code is hard to test. Since we always write tests before any production code, our code is testable from the start, which makes it hard to write tightly coupled code with TDD.
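A minimal sketch of how test-first pressure can reduce coupling, assuming a hypothetical `greeting` feature that depends on the time of day. A version that calls `datetime.now()` internally cannot be tested for a specific hour, so writing the test first nudges us to pass the clock in as a parameter (simple dependency injection). This is one common technique, not the only way to decouple.

```python
from datetime import datetime
from typing import Callable


# Tightly coupled (hypothetical): reads the real clock, so a test cannot pick the hour.
def greeting_coupled() -> str:
    return "Good morning" if datetime.now().hour < 12 else "Good afternoon"


# Test-first version: the clock is injected, defaulting to the real one in production.
def greeting(now: Callable[[], datetime] = datetime.now) -> str:
    return "Good morning" if now().hour < 12 else "Good afternoon"


def test_greeting_before_noon_says_good_morning():
    assert greeting(lambda: datetime(2024, 1, 1, 9, 0)) == "Good morning"


def test_greeting_after_noon_says_good_afternoon():
    assert greeting(lambda: datetime(2024, 1, 1, 15, 0)) == "Good afternoon"


if __name__ == "__main__":
    test_greeting_before_noon_says_good_morning()
    test_greeting_after_noon_says_good_afternoon()
    print("all tests passed")
```

Production callers can still write `greeting()` and get the real clock; only the tests supply a fake.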

Much less code debugging

With TDD we work in short red/green/refactor cycles, so little code has been written since our tests last passed. That limits the scope of any bug we introduce and makes it very easy to spot.

No fear of breaking code

TDD tends to produce code with very high test coverage (90%+ is very easy to achieve), since tests are always written first. With such high coverage, we are much less likely to break the code accidentally without noticing.

Test cases as documentation

If written well, test cases are the best documentation, because they are always up to date and describe, scenario by scenario, exactly how the production code is meant to be used.
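To make this concrete, here is a small sketch with a hypothetical `average` function (not from any library). Each test name states one scenario, including the empty-list boundary case, so reading the test names alone tells a newcomer how the function behaves. The tests are plain pytest-style functions with no framework dependency.

```python
def average(numbers: list[float]) -> float:
    """Return the arithmetic mean of a non-empty list of numbers."""
    if not numbers:
        raise ValueError("average() of an empty list is undefined")
    return sum(numbers) / len(numbers)


# Each test name reads as one line of the function's specification.
def test_average_of_several_numbers_is_their_sum_divided_by_count():
    assert average([2, 4, 9]) == 5


def test_average_of_a_single_number_is_that_number():
    assert average([7]) == 7


def test_average_of_an_empty_list_raises_value_error():
    try:
        average([])
    except ValueError:
        return  # expected: an empty list has no average
    raise AssertionError("expected ValueError for an empty list")


if __name__ == "__main__":
    test_average_of_several_numbers_is_their_sum_divided_by_count()
    test_average_of_a_single_number_is_that_number()
    test_average_of_an_empty_list_raises_value_error()
    print("all tests passed")
```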

How To Start?

TDD is an easy-to-learn but hard-to-master skill. The best way to improve our TDD skills is through practice. There are many exercises, called katas, that can help accelerate our learning. In my next post, I will use one of the simplest katas, Calc Stats, to demonstrate how to get started with TDD.